Electrostatic potential minimization¶

The main purpose of iwopy is to be helpful when attacking more complicated optimization tasks than the minimization of simple analytical functions. As an example, we consider the minimization of an inverse distance type potential for a fully coupled system of \(N\) particles in two dimensions. We can imagine such a system to be composed of \(N\) individual point charges, each of them carrying the same electric unit charge. The potential that we want to minimize is then represented by

denotes the two dimensional position vector of charge \(i\) and the sum is over all unequal index pairs. This potential favours large distances between charges, hence we need to constrain them to a certain area for a meaningful solution. We confine them to a circle of radius \(R\) by imposing \(N\) constraints,

These are the required imports for this example:

In [1]:

import numpy as np

import matplotlib.pyplot as plt

from iwopy import Problem, Objective, Constraint

from iwopy.interfaces.pymoo import Optimizer_pymoo

We start by creating a specific class that describes the variables of our problem:

In [2]:

class ChargesProblem(Problem):

def __init__(self, xy_init, radius):

super().__init__(name="charges_problem")

self.xy_init = xy_init

self.n_charges = len(xy_init)

self.radius = radius

def var_names_float(self):

"""Defines the variable names"""

vnames = []

for i in range(self.n_charges):

vnames += [f"x{i}", f"y{i}"]

return vnames

def initial_values_float(self):

"""Returns inital values, as given to constructor"""

return self.xy_init.reshape(2 * self.n_charges)

def min_values_float(self):

"""Minimal values for a square of size 2*radius"""

return np.full(2 * self.n_charges, -self.radius)

def max_values_float(self):

"""Maximal values for a square of size 2*radius"""

return np.full(2 * self.n_charges, self.radius)

def apply_individual(self, vars_int, vars_float):

"""Returns (x, y) variables for each charge"""

return vars_float.reshape(self.n_charges, 2)

def apply_population(self, vars_int, vars_float):

"""Returns (x, y) variables for each charge per individual"""

n_pop = len(vars_float)

return vars_float.reshape(n_pop, self.n_charges, 2)

def get_fig(self, xy):

"""Get a figure showing the charges locations"""

fig, ax = plt.subplots(figsize=(6, 6))

ax.scatter(xy[:, 0], xy[:, 1], color="orange")

ax.add_patch(plt.Circle((0, 0), self.radius, color="darkred", fill=False))

ax.set_aspect("equal", adjustable="box")

ax.set_xlabel("x")

ax.set_ylabel("y")

ax.set_title(f"N = {self.n_charges}")

return fig

There are \(2 N\) variables for this problem, and we order them as \((x_0, y_0, \ldots, x_{N-1}, y_{N-1})\). This is convenient, since the numpy reshaping operation vars_float.reshape(n_charges, 2) then represents an array of \((x_i, y_i)\) type arrays, which is handy for calculations.

Notice the two functions apply_individual and apply_population. They are being called during optimization whenever new variables are being set by the optimizer, and their purpose is to update the data in the problem class accordingly (excluding objectives and constraints, which we will deal with shortly).

The difference between the two functions is that apply_individual evaluates a single vector of new problem variables, whereas apply_population handles a full population of such vectors. The implementation of the latter function is not strictly required (alternatively a loop over individuals of the population will be evaluated), but it provides a chance for a vast speed-up by vectorized evaluation. This is particularly beneficial for genetic algorithms or other easily vectorizable

approaches (in fact also the gradient calculation via iwopy discretizations makes use of this vectorization). Of course the chosen optimizer must be able to support vectorized evaluation for this to work. Both functions return any kind of evaluation data which will be forwarded to the objective and constraint evaluation.

So far we have only defined the problem variables. Objectives and constraints can be added to the problem via the add_objective and add_constraint functions, respectively. First, we implement the inverse distance potential as our objective:

In [3]:

class MinPotential(Objective):

def __init__(self, problem):

"""Define the same variable names and ordering as in the problem"""

super().__init__(problem, "potential", vnames_float=problem.var_names_float())

self.n_charges = problem.n_charges

def n_components(self):

"""The potential is a scalar function, hence one component"""

return 1

def maximize(self):

"""Indicates that the single component is to be minimized"""

return [False]

def calc_individual(self, vars_int, vars_float, problem_results, components=None):

"""This evaluates the potential. See problem.apply_individual

for problem results"""

xy = problem_results

value = 0.0

for i in range(1, self.n_charges):

dist = np.maximum(np.linalg.norm(xy[i - 1, None] - xy[i:], axis=-1), 1e-10)

value += 2 * np.sum(1 / dist)

return value

def calc_population(self, vars_int, vars_float, problem_results, components=None):

"""This evaluates the potential in vectorized manner.

See problem.apply_population for problem results"""

xy = problem_results

n_pop = len(xy)

value = np.zeros((n_pop, 1))

for i in range(1, self.n_charges):

dist = np.maximum(

np.linalg.norm(xy[:, i - 1, None] - xy[:, i:], axis=-1), 1e-10

)

value[:, 0] += 2 * np.sum(1 / dist, axis=1)

return value

Notice that the functions calc_individual and its (again optional) vectorized correspondent calc_population do not directly make use of the variables vector vars_float, which they could (and it would be perfectly fine, even intended), but instead of the problem_results provided by the problem functions apply_individual and apply_population.

Next, we implement the \(N\) radial constraints:

In [4]:

class MaxRadius(Constraint):

def __init__(self, problem, tol=1e-2):

"""Define the same variable names and ordering as in the problem"""

super().__init__(

problem, "radius", vnames_float=problem.var_names_float(), tol=tol

)

self.n_charges = problem.n_charges

self.radius = problem.radius

def n_components(self):

"""One constraint per charge"""

return self.n_charges

def vardeps_float(self):

"""Boolean array that defines which component depends

on which variable (optional, default is on all)"""

deps = np.zeros((self.n_components(), self.n_charges, 2), dtype=bool)

np.fill_diagonal(deps[..., 0], True)

np.fill_diagonal(deps[..., 1], True)

return deps.reshape(self.n_components(), 2 * self.n_charges)

def calc_individual(self, vars_int, vars_float, problem_results, components=None):

"""This evaluates the constraints, negative values are valid.

See problem.apply_individual for problem results"""

components = np.s_[:] if components is None else components

xy = problem_results[components]

r = np.linalg.norm(xy, axis=-1)

return r - self.radius

def calc_population(self, vars_int, vars_float, problem_results, components=None):

"""This evaluates the constraints in vectorized manner,

negative values are valid. See problem.apply_population for

problem results"""

components = np.s_[:] if components is None else components

xy = problem_results[:, components]

r = np.linalg.norm(xy, axis=-1)

return r - self.radius

Note that by default, negative constraint values represent validity. This can be changed by overloading the function get_bounds of the base class, see API, but is not recommended.

Also note that the function vardeps_float is only relevant for gradient based solvers that support gradient sparsity (e.g. pygmo.ipopt). It is irrelevant for the genetic algorithm that we want to use in this example, but added for the sake of completeness.

Now let’s add the objective and the constraints and initialize the problem:

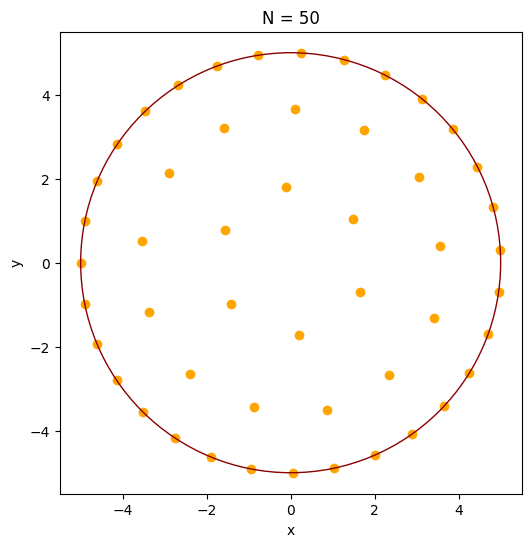

In [5]:

N = 20

R = 5

# generate random initial coordinates of the N charges:

xy = np.random.uniform(-R / 10.0, R / 10.0, (N, 2))

problem = ChargesProblem(xy, R)

problem.add_objective(MinPotential(problem))

problem.add_constraint(MaxRadius(problem))

problem.initialize()

fig = problem.get_fig(xy)

plt.show()

Problem 'charges_problem' (ChargesProblem): Initializing

--------------------------------------------------------

n_vars_int : 0

n_vars_float: 40

--------------------------------------------------------

n_objectives: 1

n_obj_cmptns: 1

--------------------------------------------------------

n_constraints: 1

n_con_cmptns: 20

--------------------------------------------------------

Finally, we can now setup the genetic algorithm GA from pymoo and solve the problem, here in vectorized form (flag vectorize=True):

In [6]:

solver = Optimizer_pymoo(

problem,

problem_pars=dict(

vectorize=True,

),

algo_pars=dict(

type="GA",

pop_size=100,

seed=1,

),

setup_pars=dict(),

term_pars=dict(

type="default",

n_max_gen=300,

ftol=1e-6,

xtol=1e-6,

),

)

solver.initialize()

solver.print_info()

results = solver.solve(verbosity=0)

solver.finalize(results)

Loading pymoo

pymoo successfully loaded

Initializing Optimizer_pymoo

Selecting sampling: float_random (FloatRandomSampling)

Selecting algorithm: GA (GA)

Selecting termination: default (DefaultSingleObjectiveTermination)

Problem:

--------

vectorize: True

Algorithm:

----------

type: GA

pop_size: 100

seed: 1

Termination:

------------

n_max_gen: 300

ftol: 1e-06

xtol: 1e-06

Optimizer_pymoo: Optimization run finished

Success: True

Best potential = 78.69922244245727

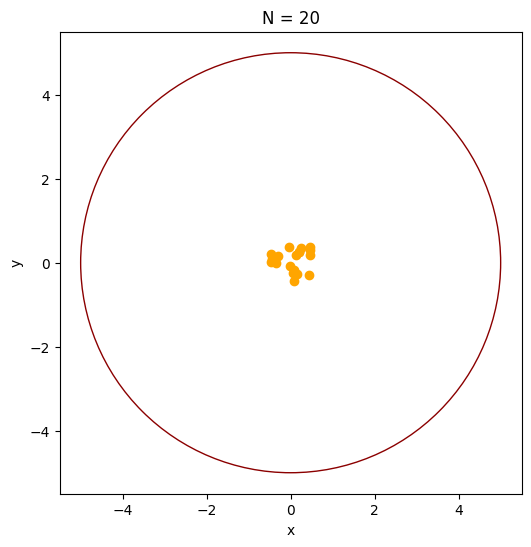

In [7]:

print(results)

xy = results.problem_results

fig = problem.get_fig(xy)

plt.show()

Results problem 'charges_problem':

-----------------------------------

Float variables:

0: x0 = 2.236112e+00

1: y0 = -4.470885e+00

2: x1 = 2.082072e+00

3: y1 = 7.199634e-01

4: x2 = 4.258628e+00

5: y2 = 2.615461e+00

6: x3 = 4.931458e+00

7: y3 = 8.239395e-01

8: x4 = -3.453717e+00

9: y4 = -3.615375e+00

10: x5 = -2.459065e+00

11: y5 = 4.351725e+00

12: x6 = -6.527966e-01

13: y6 = 2.161302e+00

14: x7 = 1.670812e+00

15: y7 = -2.382281e+00

16: x8 = -1.235636e+00

17: y8 = -1.913568e+00

18: x9 = -2.099603e+00

19: y9 = -4.536876e+00

20: x10 = -4.701957e+00

21: y10 = -1.697765e+00

22: x11 = 1.517358e+00

23: y11 = 4.762982e+00

24: x12 = 4.845131e+00

25: y12 = -1.233574e+00

26: x13 = 4.140025e+00

27: y13 = -2.796535e+00

28: x14 = 2.992287e+00

29: y14 = 4.004978e+00

30: x15 = 2.484520e-02

31: y15 = -4.999799e+00

32: x16 = -4.887334e+00

33: y16 = 1.053824e+00

34: x17 = -3.199172e+00

35: y17 = 1.417813e-01

36: x18 = -3.795050e+00

37: y18 = 3.249910e+00

38: x19 = -4.806220e-01

39: y19 = 4.976805e+00

-----------------------------------

Objectives:

0: potential = 7.869922e+01

-----------------------------------

Constraints:

0: radius_0 = -1.098864e-03

1: radius_1 = -2.796963e+00

2: radius_2 = -2.345300e-03

3: radius_3 = -1.849789e-04

4: radius_4 = -8.972586e-05

5: radius_5 = -1.548850e-03

6: radius_6 = -2.742264e+00

7: radius_7 = -2.090210e+00

8: radius_8 = -2.722163e+00

9: radius_9 = -8.424672e-04

10: radius_10 = -9.192331e-04

11: radius_11 = -1.163198e-03

12: radius_12 = -3.001922e-04

13: radius_13 = -3.960526e-03

14: radius_14 = -6.371277e-04

15: radius_15 = -1.389087e-04

16: radius_16 = -3.424350e-04

17: radius_17 = -1.797687e+00

18: radius_18 = -3.568959e-03

19: radius_19 = -4.112099e-05

-----------------------------------

Success: True

-----------------------------------

Note that this problem has many equivalent solutions, and the displayed result is the best solution that the genetic algorithm was able to find within its convergence criteria. With heuristic methods there is generally no guarantee or proof that a global optimum has been found. However, the objective function has clearly been minimized, and therefore the “design” was improved which is often the goal in engineering tasks.

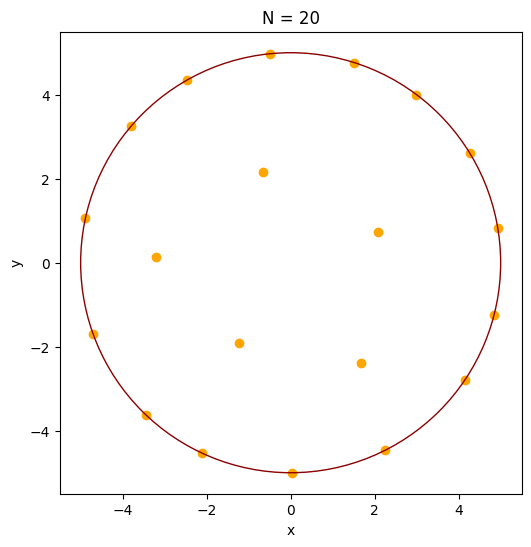

For the fun of it, let’s also solve the problem using iwopy’s GG solver (stands for Greedy Gradient), now for 50 charges. This solver is a simple steepest decent algorithm which projects out directions that would violate constraints (and reverts those that already do). It supports iwopy’s vectorization capabilities and hence can run fast for many variables. Of course it is not as advanced as other gradient based solvers, but for this particular problem it does a good job:

In [8]:

from iwopy import LocalFD

from iwopy.optimizers import GG

In [9]:

N = 50

R = 5

xy = np.random.uniform(-R / 10.0, R / 10.0, (N, 2))

problem = ChargesProblem(xy, R)

problem.add_objective(MinPotential(problem))

problem.add_constraint(MaxRadius(problem))

gproblem = LocalFD(problem, deltas=1e-2, fd_order=1)

gproblem.initialize()

solver = GG(

gproblem,

step_max=0.1,

step_min=1e-4,

vectorized=True,

)

solver.initialize()

results = solver.solve(verbosity=0)

solver.finalize(results)

print(results)

Problem 'charges_problem' (ChargesProblem): Initializing

--------------------------------------------------------

n_vars_int : 0

n_vars_float: 100

--------------------------------------------------------

n_objectives: 1

n_obj_cmptns: 1

--------------------------------------------------------

n_constraints: 1

n_con_cmptns: 50

--------------------------------------------------------

Problem 'charges_problem_fd' (LocalFD): Initializing

----------------------------------------------------

n_vars_int : 0

n_vars_float: 100

----------------------------------------------------

n_objectives: 1

n_obj_cmptns: 1

----------------------------------------------------

n_constraints: 1

n_con_cmptns: 50

----------------------------------------------------

GG: Optimization run finished

Success: True

Best potential = 585.5754719667092

Results problem 'charges_problem_fd':

--------------------------------------

Float variables:

0: x0 = 1.087311e-01

1: y0 = 3.652947e+00

2: x1 = -4.606218e+00

3: y1 = 1.944930e+00

4: x2 = -1.887607e+00

5: y2 = -4.630003e+00

6: x3 = 3.562191e+00

7: y3 = 4.047046e-01

8: x4 = 1.485097e+00

9: y4 = 1.052208e+00

10: x5 = 3.858055e+00

11: y5 = 3.180473e+00

12: x6 = -4.141494e+00

13: y6 = -2.801432e+00

14: x7 = -4.122978e+00

15: y7 = 2.828613e+00

16: x8 = -8.834537e-01

17: y8 = -3.429528e+00

18: x9 = 2.007586e+00

19: y9 = -4.579257e+00

20: x10 = -3.463435e+00

21: y10 = 3.606192e+00

22: x11 = 1.932276e-01

23: y11 = -1.712766e+00

24: x12 = -1.579416e+00

25: y12 = 3.213613e+00

26: x13 = 1.743332e+00

27: y13 = 3.151292e+00

28: x14 = -1.420385e+00

29: y14 = -9.827574e-01

30: x15 = 3.402227e+00

31: y15 = -1.309271e+00

32: x16 = -3.366573e+00

33: y16 = -1.166486e+00

34: x17 = -4.900849e+00

35: y17 = -9.907955e-01

36: x18 = 2.878439e+00

37: y18 = -4.088348e+00

38: x19 = 1.042442e+00

39: y19 = -4.890124e+00

40: x20 = 3.120221e+00

41: y20 = 3.906946e+00

42: x21 = 4.444495e+00

43: y21 = 2.290517e+00

44: x22 = 3.645875e+00

45: y22 = -3.421637e+00

46: x23 = 2.536083e-01

47: y23 = 4.993564e+00

48: x24 = 3.064096e+00

49: y24 = 2.047608e+00

50: x25 = 4.740609e-02

51: y25 = -4.999775e+00

52: x26 = -3.516980e+00

53: y26 = -3.553991e+00

54: x27 = -7.810981e-01

55: y27 = 4.938612e+00

56: x28 = -4.607438e+00

57: y28 = -1.942042e+00

58: x29 = 1.275371e+00

59: y29 = 4.834607e+00

60: x30 = 4.255719e+00

61: y30 = -2.624664e+00

62: x31 = -1.127440e-01

63: y31 = 1.802090e+00

64: x32 = -2.673215e+00

65: y32 = 4.225390e+00

66: x33 = -2.750043e+00

67: y33 = -4.175796e+00

68: x34 = 2.240040e+00

69: y34 = 4.470148e+00

70: x35 = -2.396197e+00

71: y35 = -2.639390e+00

72: x36 = -2.902047e+00

73: y36 = 2.146349e+00

74: x37 = 2.344344e+00

75: y37 = -2.683691e+00

76: x38 = 4.819316e+00

77: y38 = 1.331988e+00

78: x39 = -3.530091e+00

79: y39 = 5.240002e-01

80: x40 = 4.990310e+00

81: y40 = 3.111336e-01

82: x41 = -9.358615e-01

83: y41 = -4.911636e+00

84: x42 = 4.702197e+00

85: y42 = -1.699806e+00

86: x43 = 8.549782e-01

87: y43 = -3.505766e+00

88: x44 = -4.999989e+00

89: y44 = -1.061685e-02

90: x45 = -4.900949e+00

91: y45 = 9.903010e-01

92: x46 = 4.949815e+00

93: y46 = -7.066317e-01

94: x47 = 1.642586e+00

95: y47 = -7.069032e-01

96: x48 = -1.757321e+00

97: y48 = 4.681007e+00

98: x49 = -1.552179e+00

99: y49 = 7.801572e-01

--------------------------------------

Objectives:

0: potential = 5.855755e+02

--------------------------------------

Constraints:

0: radius_0 = -1.345435e+00

1: radius_1 = 1.247216e-07

2: radius_2 = -6.293392e-07

3: radius_3 = -1.414893e+00

4: radius_4 = -3.179930e+00

5: radius_5 = -6.227472e-10

6: radius_6 = -3.288465e-07

7: radius_7 = -4.991725e-08

8: radius_8 = -1.458509e+00

9: radius_9 = -1.129005e-07

10: radius_10 = 1.821135e-08

11: radius_11 = -3.276369e+00

12: radius_12 = -1.419237e+00

13: radius_13 = -1.398633e+00

14: radius_14 = -3.272775e+00

15: radius_15 = -1.354546e+00

16: radius_16 = -1.437065e+00

17: radius_17 = -5.599746e-07

18: radius_18 = 4.179612e-08

19: radius_19 = 2.246986e-07

20: radius_20 = 1.327298e-09

21: radius_21 = 4.092386e-09

22: radius_22 = -2.982356e-08

23: radius_23 = 2.657750e-09

24: radius_24 = -1.314707e+00

25: radius_25 = -4.232622e-07

26: radius_26 = 1.408324e-07

27: radius_27 = -9.170680e-09

28: radius_28 = 7.811203e-07

29: radius_29 = 5.608240e-09

30: radius_30 = 7.925003e-09

31: radius_31 = -3.194387e+00

32: radius_32 = -2.086939e-08

33: radius_33 = 6.383923e-07

34: radius_34 = 4.152908e-09

35: radius_35 = -1.435152e+00

36: radius_36 = -1.390472e+00

37: radius_37 = -1.436554e+00

38: radius_38 = 7.981631e-09

39: radius_39 = -1.431231e+00

40: radius_40 = 6.595215e-09

41: radius_41 = 6.953370e-07

42: radius_42 = -5.153245e-09

43: radius_43 = -1.391485e+00

44: radius_44 = 4.658783e-07

45: radius_45 = -2.144952e-07

46: radius_46 = 9.729153e-09

47: radius_47 = -3.211760e+00

48: radius_48 = 7.765166e-10

49: radius_49 = -3.262789e+00

--------------------------------------

Success: True

--------------------------------------

In [10]:

xy = results.problem_results

fig = problem.get_fig(xy)

plt.show()